Getting Apple’s tiny on-device foundation model to pick SF Symbols in Hour by Hour

I’ve been experimenting with Apple’s on-device LLM, and most of what I’ve seen from it has been… absolute nonsense. But with one cunning trick, I got it to achieve greatness.

It is astonishingly dim a lot of the time and will cheerfully hand you rubbish with complete confidence. That's honestly to be expected - it's a 3-billion-parameter model (that, err, acts like a 1B model). Still, I wanted to see if I could bend it to my will and get something properly useful out of it for my upcoming day planning app, Hour by Hour.

My first attempt was the most obvious one: give it the event title and ask for an SF Symbol. That went about as well as you'd expect. Even large cloud models like GPT-5 hallucinate SF Symbols that don't exist or pick something wildly off target, so this was no surprise.

But I did have a modicum of hope that if I gave it a list of only 12 SF Symbols to choose from, it might do the right thing. Right? Right???

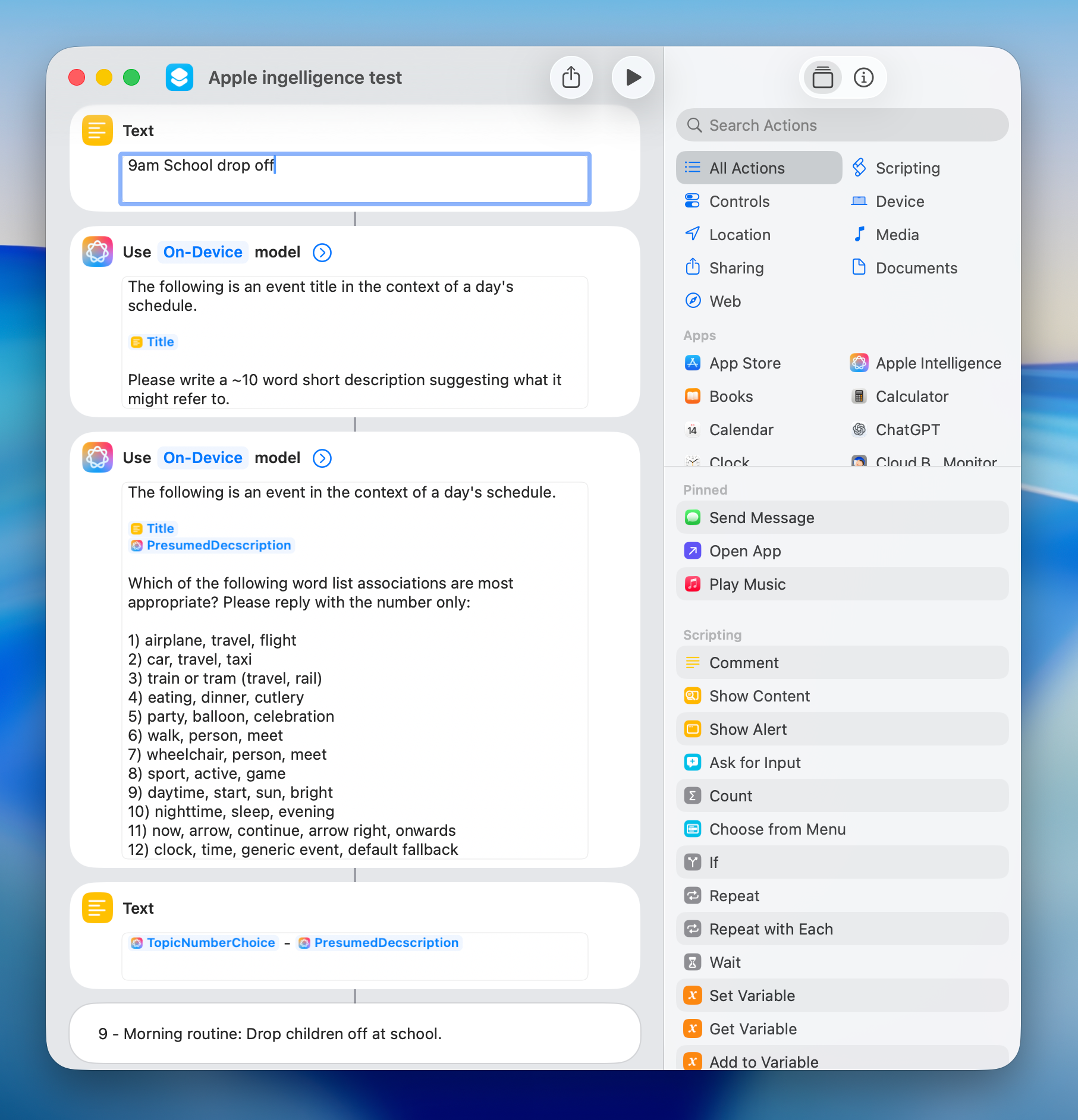

(One thing that genuinely helped in the early stages was the fact that the Shortcuts app has Apple Intelligence actions. It’s a great place to prototype prompts quickly without wiring anything into my code. I could tweak phrasing, see how the model behaved, and very quickly learn what it was hopeless at and what it could just about handle. Most of my dead ends and small breakthroughs happened in Shortcuts long before I wrote a line of Swift.)

I realised that it doesn't actually know what SF Symbols look like, or the concepts they're intended to represent. So I swapped the multiple-choice list of symbol names for a numbered list of plain words, each of which I would then map to an SF Symbol. I also found that asking it to start by writing a brief description of the event helped, acting as a short "thinking phase" that expands on what a terse event title might refer to.

This worked... a little more than half the time? But I want at least ~95% accuracy, not ~60%. And that was with a very short list of possible symbols.
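The numbered-word-list approach can be sketched roughly as follows. This is a minimal illustration, not the app's code: the six words, their symbol names, the prompt wording, and the helpers makeWordListPrompt and symbol(fromReply:) are all hypothetical, and the actual call to the on-device model is left out. The sketch only builds the prompt and maps the number in the reply back to a symbol, using the last number so that digits in the description sentence don't win.

```swift
// Hypothetical word list: a plain word the model can reason about, paired
// with the SF Symbol name the app would actually display.
let choices: [(word: String, symbol: String)] = [
    ("food", "fork.knife"),
    ("exercise", "figure.run"),
    ("work", "briefcase"),
    ("sleep", "bed.double"),
    ("travel", "airplane"),
    ("meeting", "person.2"),
]

/// Asks for a one-sentence description first (a short "thinking phase"),
/// then for the number of the best-fitting word. Wording is illustrative.
func makeWordListPrompt(eventTitle: String) -> String {
    let numbered = choices.enumerated()
        .map { "\($0.offset + 1). \($0.element.word)" }
        .joined(separator: "\n")
    return """
        Event title: \(eventTitle)
        First write one short sentence describing what this event probably is.
        Then, on a new line, reply with only the number of the best-fitting word:
        \(numbered)
        """
}

/// Maps the reply back to a symbol using the last number it contains, so
/// digits in the description sentence don't override the final answer.
func symbol(fromReply reply: String) -> String? {
    let numbers = reply.split(whereSeparator: { !$0.isNumber }).compactMap { Int($0) }
    guard let n = numbers.last, choices.indices.contains(n - 1) else { return nil }
    return choices[n - 1].symbol
}
```

Any number outside the list (or no number at all) returns nil, which leaves the caller free to fall back to a default icon.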

With only around 4-6 choices, it's pretty reliable. So I considered splitting SF Symbols into nested tiers of categories, effectively having the model navigate through menus to find the right symbol. It might have worked in theory, but it was far too slow and fragile. If each step takes about a second, you end up waiting several seconds just to get an icon, and the categorisation itself is never perfectly clean.
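This tiered idea was abandoned, so the following is only a minimal sketch to make its cost concrete, assuming a hypothetical two-level category tree. The choose closure stands in for a single model call that picks an option at one level; because every level needs its own call, a three-level tree at about a second per call means about three seconds before an icon appears.

```swift
// Hypothetical category tree: a menu is a list of (label, child) pairs,
// and leaves are SF Symbol names.
enum SymbolMenu {
    case symbol(String)
    case menu([(String, SymbolMenu)])
}

/// Walks the tree one level at a time. `choose` stands in for one model
/// call that returns the index of the chosen label (or nil on failure).
func navigate(_ root: SymbolMenu,
              choose: ([String]) -> Int?) -> (symbol: String, modelCalls: Int)? {
    var node = root
    var calls = 0
    while case .menu(let options) = node {
        calls += 1  // each menu level costs one model call
        guard let index = choose(options.map { $0.0 }),
              options.indices.contains(index) else { return nil }
        node = options[index].1
    }
    guard case .symbol(let name) = node else { return nil }
    return (name, calls)
}

// Illustrative tree; a real one would need far more categories and depth.
let exampleTree = SymbolMenu.menu([
    ("Food and drink", .menu([
        ("Meal", .symbol("fork.knife")),
        ("Coffee", .symbol("cup.and.saucer")),
    ])),
    ("Exercise", .menu([
        ("Running", .symbol("figure.run")),
        ("Cycling", .symbol("bicycle")),
    ])),
])
```

A single wrong pick at any level sends the walk down the wrong branch with no way back, which is the fragility problem on top of the latency.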

🤓 Emojis are always the answer 🤯

So I took a step back and thought about what LLMs are trained on - the internet. Emoji. They swim in emoji. There is a mountain of training data online where people use emoji in context alongside everyday language. Emoji are also very short to generate, which keeps responses fast.

The new idea was simple. Ask the model for one emoji that represents the event title. Nothing else. No commentary. No fuss. That single change fixed almost everything. The prompt is tiny, the output is tiny, and the model is very confident when choosing emoji.
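A minimal sketch of the emoji step, assuming hypothetical prompt wording and helper names (makeEmojiPrompt, firstEmoji(in:)). The call to the on-device model itself, for example through Apple's Foundation Models framework, is left out. Even with a "nothing else" instruction, a small model might occasionally add stray text, so the sketch defensively takes the first character that renders as emoji.

```swift
/// Hypothetical prompt wording; the idea is just "one emoji, nothing else".
func makeEmojiPrompt(eventTitle: String) -> String {
    "Reply with exactly one emoji that represents this event, and nothing else.\nEvent: \(eventTitle)"
}

extension Character {
    /// True for characters that render as emoji. Plain digits, "#" and "*"
    /// also carry the Unicode isEmoji property (they can form keycaps), so a
    /// lone scalar only counts if it defaults to emoji presentation.
    var rendersAsEmoji: Bool {
        guard let first = unicodeScalars.first else { return false }
        return first.properties.isEmojiPresentation
            || (first.properties.isEmoji && unicodeScalars.count > 1)
    }
}

/// Returns the first emoji in the model's reply, ignoring any commentary.
func firstEmoji(in reply: String) -> Character? {
    reply.first(where: { $0.rendersAsEmoji })
}
```

Because Swift iterates a String by grapheme cluster, multi-scalar emoji such as 🍽️ come back as a single Character.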

But I didn't actually want to show emoji; they weren't the tone or style I was going for. I wanted Apple's elegant vector SF Symbols. So I built what is now the secret sauce that makes the whole feature work: a giant dictionary that maps emoji to SF Symbols. In practice I author it backwards, as a limited set of SF Symbol names, each mapped to a long string of possible emoji, and then build the reverse mapping on load. When the model produces an emoji, I look it up in the dictionary and convert it to the SF Symbol it belongs to:

```swift
let sfSymbolToEmojiMapping = [
    ("fork.knife", "🍽️🍴🥄🍕🍔🍟🥗🥪🍣🍜🍝🌮🌯🥘🍲🍱🥙🌭🥟🥠🥡🥨🥯🥞🧇🧀🍖🍗🥩🥓🧈🥐🥖🫓🍞🥢🍳👩🍳"),
    // ...
]
```

So if your event title contains “eat tacos”, the model will most likely produce the taco emoji, which then maps neatly to fork.knife.
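The reverse mapping built on load might look something like this minimal sketch. The table is a trimmed, illustrative subset and the helper names (normalizedKey, makeEmojiToSymbol, sfSymbol(forModelReply:)) are hypothetical. One assumption worth calling out: the same emoji can arrive with or without the U+FE0F presentation selector (🍽️ versus 🍽), and Swift compares those as different Characters, so the sketch strips that selector from keys on both the build and lookup sides.

```swift
// Trimmed, illustrative subset of a symbol -> emoji table (not the real one).
let sfSymbolToEmojiMapping: [(String, String)] = [
    ("fork.knife", "🍽️🍴🥄🍕🍔🌮🌯🥗"),
    ("carrot.fill", "🥕🥦🥑🥬"),
    ("figure.run", "🏃👟"),
]

/// Drops U+FE0F (the emoji presentation selector) so "🍽️" and "🍽",
/// which Swift treats as different Characters, share one key.
func normalizedKey(_ emoji: Character) -> String {
    let scalars = emoji.unicodeScalars.filter { $0 != "\u{FE0F}" }
    return String(String.UnicodeScalarView(scalars))
}

/// Builds the emoji -> symbol dictionary once, at load time. Iterating a
/// String yields Characters, so multi-scalar emoji stay whole.
func makeEmojiToSymbol(_ mapping: [(String, String)]) -> [String: String] {
    var lookup: [String: String] = [:]
    for (symbolName, emojis) in mapping {
        for emoji in emojis {
            let key = normalizedKey(emoji)
            // Assumption: if an emoji is listed twice, the first symbol wins.
            if lookup[key] == nil { lookup[key] = symbolName }
        }
    }
    return lookup
}

let emojiToSymbol = makeEmojiToSymbol(sfSymbolToEmojiMapping)

/// Returns the symbol for the first mappable emoji in the model's reply.
func sfSymbol(forModelReply reply: String) -> String? {
    for character in reply {
        if let name = emojiToSymbol[normalizedKey(character)] { return name }
    }
    return nil
}
```

With this table, a reply of 🌮 resolves to fork.knife, while an emoji missing from the table returns nil so the caller can fall back to a default symbol.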

Most of the time this gives lovely results. Occasionally it does something ridiculous. “Eat breakfast” once came back as an avocado emoji 🥑 (?!), which then mapped to carrot.fill because I'd grouped all the vegetable and healthy-eating emoji under that symbol. So breakfast briefly became a carrot. Fair enough. Breakfast is what you make of it.

Known bugs: "Start work" should really get something like a briefcase, and newly created events shouldn't default to the previously used icon.

The best part is that this is incredibly fast. The on-device model only gets a small prompt and returns a single emoji, and there's no round trip to the cloud. It behaves well, feels instant, and makes Hour by Hour nicer to use without draining power or requiring a connection.

I wanted Apple Intelligence to feel genuinely helpful and not like a gimmick. With this emoji trick it finally clicked into place. A tiny model can do something surprisingly clever if you ask it for the right thing.